By Dr Michael “Budsy” Davis, Strategic AI Consultant in Generative Media

In a world where artificial intelligence shapes perception at scale, understanding the boundaries of generative content is no longer optional—it’s essential. Public platforms like OpenAI’s DALL·E, MidJourney, and Google’s Imagen have deployed visible filters designed to block users from generating images of public figures. Yet despite these safeguards, fake media featuring political leaders

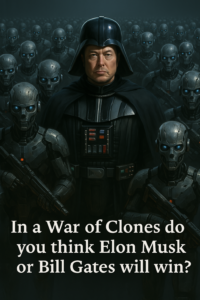

and celebrities—like the viral “Donald Trump 1988 hospital photo” and the Elon Musk-inspired “War of Clones” sci-fi scene—continue to appear online. These were not accidents. They are deliberate, strategic, and expertly crafted by users who know exactly how to game the system.

As a consultant to political campaigns, media firms, and content creators, I’m often brought in after the damage is done, when a client sees a fake image go viral and asks, “How did they make this? I thought the AI was supposed to block it.” The truth is that the restrictions are porous. While mainstream platforms filter for names and facial likenesses, advanced users employ workarounds using indirect prompts, fine-tuned local models, and tools like ControlNet or IP-Adapter to craft visuals with astonishing realism. The Trump image, for example, was likely created using a vague but suggestive prompt, then manually refined or composited to create emotional and historical resonance.

Similarly, the Elon Musk “Clone Army” image plays off visual motifs associated with Musk and sci-fi villain archetypes. No names were needed. Instead, the image was composed using prompts like “tech warlord” or “futuristic CEO commanding humanoid robots,” paired with cinematic lighting and visual cues. The resemblance was clear—because it didn’t have to be explicit. This is how your competitors are influencing perception: not by telling lies outright, but by shaping suggestion through visual implication. It’s persuasive. It’s viral. And it’s largely unregulated.

Many platforms enforce ethical restrictions—but only within their walled gardens. Once Stable Diffusion is downloaded and run locally, or accessed via permissive cloud platforms, those restrictions vanish. Users can train models on likenesses with just 20–30 photos, or use reference-based prompting to match posture, emotion, and context. From there, anything is possible: scandalous headlines, staged history, or inspirational moments that never occurred. The images become story anchors, meme fodder, or ammunition in digital psy-ops. This is what your opposition understands—and what most brands still don’t.

Now, does this mean you should engage in misinformation? Absolutely not. But understanding these tactics allows you to build defensible strategies, stay three moves ahead of false narratives, and leverage compliant versions of these tools for ethical influence. Whether you’re a political campaign, content studio, advocacy organization, or brand trying to capture attention—knowing the rules means knowing how to bend them without breaking them. I specialize in helping clients operate confidently in this gray zone.

What most users don’t realize is that many of these filters are semantic, not visual. That means you can bypass them with suggestive prompts, metaphorical language, or stylized proxies. Some use poetic phrasing; others input photo references into AI editing tools for iterative changes. These strategies may not be publicized, but they’re widespread. Even with tools like DALL·E 3 or Midjourney, skilled operators can simulate powerful likenesses under the guise of fiction, satire, or commentary, framings that may not technically violate platform terms. They’ve simply learned how to speak in the AI’s language.

That’s where I come in. My role isn’t to create fake news—it’s to create synthetic fluency. I help businesses, campaigns, and creators build systems that understand both the ethical landscape and its blind spots. I teach you how your brand might be misrepresented, how your opponent might exploit these tools, and how you can create truthful, engaging, legally sound content using the exact same underlying technology. Whether you’re trying to stop the next viral fake or ride the wave of visual storytelling, this is the future of influence—and the gatekeepers no longer hold the keys.

The genie isn’t just out of the bottle—it’s creating its own alternate timelines. If you’re not prepared to understand how, where, and why these images are being made, you’re leaving your narrative up to your opponents. Let’s make sure you control your image—before someone else does.